What struck me was that, since I am using LVM spanning several disks, the failure of a single disk would drag the others along with it and the entire LVM volume would be lost. That is a particularly unpleasant prospect, especially as the MythTV recordings (mostly Simpsons, Stargate and Alias) are not regularly backed up. So I decided to dig into the world of RAID.

I started by analysing the current disk usage to get a full picture of our needs.

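| Volume group | Logical volume | Size [GB] | Used [GB] | Free [GB] |
|---|---|---:|---:|---:|
| lvm_video | video | 931 | 867 | 65 |
| opus_main | lvm_users | 200 | 24 | 177 |
| opus_main | lvm_audio | 300 | 227 | 74 |
| opus_main | lvm_lager | 500 | 359 | 142 |
| opus_main | lvm_video | 900 | 570 | 331 |
| opus_main | opus_home | 30 | 19 | 12 |
| opus_main | subtotal | 1930 | 1199 | 736 |
| Total | | 2861 | 2066 | 801 |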

The surprise was that, by using separate file systems for different purposes (video, music, etc.), we have a slack of about 800GB: unused space sitting there just in case it is needed. On the other hand, making one big file system needs to be well thought through; one disturbance and everything might be lost, and we wouldn't want that, would we? And when file systems reach terabyte sizes, they can start to behave strangely and slowly, so the choice of file system becomes important.
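
The totals and the slack can be re-derived from the table above. A small Python sketch; the numbers are copied from the table, while the dictionary keys are just labels made up here:

```python
# Figures from the disk-usage table, in GB: (size, used, free).
# The keys are illustrative labels combining volume group and volume.
VOLUMES = {
    "lvm_video/video": (931, 867, 65),
    "opus_main/lvm_users": (200, 24, 177),
    "opus_main/lvm_audio": (300, 227, 74),
    "opus_main/lvm_lager": (500, 359, 142),
    "opus_main/lvm_video": (900, 570, 331),
    "opus_main/opus_home": (30, 19, 12),
}


def totals(volumes):
    """Return (size, used, free) summed over all volumes."""
    size = sum(s for s, _, _ in volumes.values())
    used = sum(u for _, u, _ in volumes.values())
    free = sum(f for _, _, f in volumes.values())
    return size, used, free


if __name__ == "__main__":
    size, used, free = totals(VOLUMES)
    print(f"allocated {size} GB, used {used} GB, free {free} GB")
    print(f"slack: {100 * free / size:.0f}% of the allocated space is unused")
```

It reports 801 GB free, roughly 28% of everything allocated.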

When I found some “green” 2TB hard disks on sale, I decided to make the plans happen and bought 4 × 2TB disks for the array.

Solaris

Back in the '90s I was very fond of SunOS and Solaris, and my first idea was to set up the server using OpenSolaris and ZFS. Sadly, OpenSolaris no longer seems to be supported by Oracle, so I went for OpenIndiana, the community-driven Solaris.

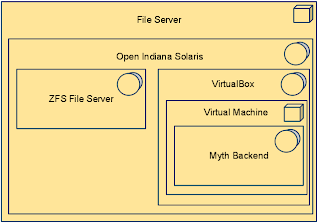

To solve my problems I planned the following setup:

I did some initial testing and really liked ZFS. It just felt good. So did Solaris; it felt like a long-lost home. I decided to go for the Solaris solution.

First I installed OpenIndiana build 148 and tried to run a system update. It failed with “System is a LiveCD” or something similar, and I had to find a workaround (sorry, no link; search for the error message to find the solution).

Setting up ZFS was easy. The first step is to create zpools. I made one pool of the 4 disks using RAID-Z, which is essentially RAID-5 with the write hole closed. The write hole is the window in which a power loss can leave any RAID-5 array inconsistent, because data and parity are not updated atomically; by the sound of it, ZFS closes it by integrating RAID and file system.
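
To see why RAID-5 (and RAID-Z) survives one lost disk, here is a toy model in Python: each stripe holds three data blocks plus one parity block that is the XOR of the data, so any single missing block is the XOR of the other three. It is a simplified sketch; real RAID-5 rotates parity across the disks and RAID-Z uses variable-width stripes, and neither is modelled here.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks followed by one parity block (RAID-5 style)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost_index):
    """Recover the block at lost_index by XOR-ing all surviving blocks."""
    survivors = [b for i, b in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


if __name__ == "__main__":
    # Three data disks + one parity disk, like a 4-disk RAID-5 / RAID-Z1.
    stripe = make_stripe([b"Simp", b"sons", b"Gate"])
    for lost in range(len(stripe)):
        assert rebuild(stripe, lost) == stripe[lost]
    print("any single lost block can be rebuilt from the other three")
```

The write hole shows up in this model too: if power is lost after a data block has been rewritten but before its parity block has, a later rebuild of that stripe silently returns wrong data.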

Second, I created some ZFS file systems (what I think of as ZFS directories). They act as mount points that can be shared over NFS, and ordinary directories and data live inside them.
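
The first two steps boil down to a handful of commands. A dry-run Python sketch that only prints them; the pool name, the Solaris disk names and the file system names are placeholders:

```python
import shlex

POOL = "tank"                                     # hypothetical pool name
DISKS = ["c2t0d0", "c2t1d0", "c2t2d0", "c2t3d0"]  # hypothetical Solaris disk names
DATASETS = ["video", "audio", "users"]            # hypothetical file system names


def zfs_setup_commands(pool, disks, datasets):
    """One RAID-Z pool over all disks, then one NFS-shared file system per dataset."""
    cmds = [["zpool", "create", pool, "raidz", *disks]]
    for name in datasets:
        cmds.append(["zfs", "create", f"{pool}/{name}"])
        cmds.append(["zfs", "set", "sharenfs=on", f"{pool}/{name}"])
    return cmds


if __name__ == "__main__":
    # Dry run: print the commands rather than executing them.
    for cmd in zfs_setup_commands(POOL, DISKS, DATASETS):
        print(shlex.join(cmd))
```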

The third step was to start moving data from my Linux disks (ReiserFS and LVM) to the Solaris disks. This turned out to be more problematic than I expected. First, I could not get Solaris to mount an NFSv3 share from Linux; as far as I could tell, Sun had gone for a non-standard security model. I made some feeble attempts to enable NFSv4 on the Linux server but gave up, and instead used a Linux workstation to rsync data onto an NFS-mounted ZFS file system. This was a painfully slow way to transfer 1.7TB of data. Writes were ridiculously slow; storing a single small HTML file took seconds. I never got as far as the huge files such as movies, but I did move the music.
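
To put a number on “seconds per small file”, a rough Python benchmark can write a batch of small files and fsync each one, so the timing includes committing the data to stable storage rather than just filling a client cache. Running it once against a local directory and once against the NFS mount gives a comparison; the file count, the 4 KiB size and the file names are arbitrary choices for this sketch.

```python
import os
import sys
import tempfile
import time


def small_file_rate(directory, count=50, size=4096, sync=True):
    """Write `count` small files into `directory`; return files written per second."""
    payload = b"x" * size
    paths = [os.path.join(directory, f"bench_{i}.html") for i in range(count)]
    start = time.perf_counter()
    for path in paths:
        with open(path, "wb") as f:
            f.write(payload)
            if sync:
                f.flush()
                os.fsync(f.fileno())  # force the data to stable storage
    elapsed = time.perf_counter() - start
    for path in paths:
        os.remove(path)  # clean up outside the timed section
    return count / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    if len(sys.argv) > 1:
        target = sys.argv[1]  # e.g. a directory on the NFS mount
        print(f"{small_file_rate(target):.1f} small files/s in {target}")
    else:
        with tempfile.TemporaryDirectory() as target:
            print(f"{small_file_rate(target):.1f} small files/s in {target}")
```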

I also found that even though the NFS share had no_root_squash (or root=IP-of-client, as it is called in Solaris), it applied to the files but not to the directories. This produces a load of errors during an rsync, which I do NOT want: I use rsync for backing up to the server and really need to see the real errors.

Besides this, I set up a MySQL server on the Solaris machine, which also turned out to be very slow. Just listing the tables in a database had a noticeable response time (even the second and third time).

Solaris also turned out to need quite a lot more memory than Linux, and I only have 2GB in the machine. That was not enough for the virtual MythBackend server. So I started the virtual server on another (Linux) host, with its virtual disk still on the ZFS file system via NFS. Once again things were very slow. This setup is not new to me, though; I regularly run virtual machines whose virtual disks are mounted over NFS.

At this point I decided to reconsider the Solaris route. The drawbacks had started to pile up:

- File system access seems to be very slow

- MySQL seems to be slow

- It cannot mount NFS shares from my Linux machines

Linux

So I reconsidered and decided to go for a Linux setup instead. This way I can keep the old Gentoo installation. I went for the following setup:

- The root partition is on the old 160GB disk.

- The 4 new 2TB disks form a RAID-5 array.

- The RAID array results in a device of roughly 5.5 TiB, which is about 6 TB (TiB is 1024-based, TB is 1000-based).

- The array is divided into 28 partitions of 200GiB each.

- A reasonable number of these partitions are added to an LVM volume group (lvm_nas).

- Logical volumes are created as needed and exported.

- All logical volumes use ext4 as their file system.
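
The 1000- versus 1024-based units are easy to mix up when carving up the array. A short Python check of the arithmetic, assuming nominal 2 TB (2 × 10^12 bytes) disks and ignoring the small amount of space md reserves for its own metadata:

```python
TB = 1000**4   # decimal terabyte, as printed on the disk label
TIB = 1024**4  # binary tebibyte, as reported by many Linux tools
GB = 1000**3
GIB = 1024**3


def raid5_usable_bytes(disk_count, disk_bytes):
    """RAID-5 spends one disk's worth of space on parity."""
    return (disk_count - 1) * disk_bytes


if __name__ == "__main__":
    usable = raid5_usable_bytes(4, 2 * TB)
    print(f"usable: {usable / TB:.2f} TB = {usable / TIB:.2f} TiB")
    print(f"whole 200 GiB partitions that fit: {usable // (200 * GIB)}")
    print(f"whole 200 GB partitions that fit: {usable // (200 * GB)}")
```

With these nominal sizes the array comes to 6.00 TB, or about 5.46 TiB, and 27 whole 200 GiB partitions fit versus 30 of 200 GB, so it is worth checking which unit the partitioning tool means by "G" before settling on a partition count.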

There were some issues with the move, though.

The first and scariest issue was with the disks. They carried what looked like a GPT partition table, left behind by Solaris, that fdisk could not handle, and the GPT was corrupt. After trying several disk utilities I stumbled on gdisk (GPT fdisk) and learned that GPT is the way to go for storage this big, as the old MBR partition table tops out at 2 TiB. With it I managed to clear out the Solaris partition information and create the single full-disk partition on each disk that goes into the RAID.
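
The 2 TiB limit comes from MBR storing sector addresses and counts in 32 bits. A Python sketch of the arithmetic, assuming the traditional 512-byte logical sectors:

```python
SECTOR_BYTES = 512        # traditional logical sector size (assumption)
MBR_MAX_SECTORS = 2**32   # MBR uses 32-bit sector addresses and counts


def mbr_limit_bytes(sector_bytes=SECTOR_BYTES):
    """Largest device size an MBR partition table can address."""
    return MBR_MAX_SECTORS * sector_bytes


def needs_gpt(device_bytes, sector_bytes=SECTOR_BYTES):
    """True if the device is too large for an MBR partition table."""
    return device_bytes > mbr_limit_bytes(sector_bytes)


if __name__ == "__main__":
    limit = mbr_limit_bytes()
    print(f"MBR limit: {limit / 1000**4:.2f} TB ({limit / 1024**4:.0f} TiB)")
    for label, size in [("single 2TB disk", 2 * 1000**4),
                        ("4-disk RAID-5 array", 6 * 1000**4)]:
        print(f"{label}: {'GPT needed' if needs_gpt(size) else 'MBR would still do'}")
```

A single 2TB disk still fits under the MBR limit, but the 6TB array built from four of them does not, which is where GPT becomes unavoidable.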

Creating the RAID array was pretty easy, but I discovered that the array is actually built in the background. For my setup the build was estimated to take 18h; in practice it finished sooner. Gentoo seems to use mdadm, while the official RAID guide refers to raidtools.
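
The background build can be followed in /proc/mdstat (`mdadm --detail /dev/md0` shows similar information). A Python sketch that pulls out the progress, the estimated minutes left and the speed; the regular expression assumes the usual `recovery = 0.4% (...) finish=...min speed=...K/sec` line, whose exact layout may vary between kernel versions. A new RAID-5 array is normally created degraded and then recovered onto the last disk, which is why the line tends to say "recovery" rather than "resync".

```python
import re

PROGRESS = re.compile(
    r"(?P<action>resync|recovery)\s*=\s*(?P<percent>[\d.]+)%"
    r".*?finish=(?P<finish>[\d.]+)min\s+speed=(?P<speed>\d+)K/sec"
)


def parse_progress(mdstat_text):
    """Return (action, percent done, minutes left, KiB/s) for the first array being built."""
    match = PROGRESS.search(mdstat_text)
    if match is None:
        return None  # nothing is resyncing or recovering
    return (
        match["action"],
        float(match["percent"]),
        float(match["finish"]),
        int(match["speed"]),
    )


if __name__ == "__main__":
    try:
        with open("/proc/mdstat") as f:
            text = f.read()
    except FileNotFoundError:
        text = ""  # not Linux, or the md driver is not loaded
    print(parse_progress(text) or "no build in progress")
```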

Once the RAID array was in place, I created a GPT partition table on it using gdisk, along with 28 partitions of 200GB each, to be used as physical volumes in LVM.

I added only as much space to the LVM volume group as I need right now. There is a lot of unallocated space left for when I need more.
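
The partitioning and LVM steps can be scripted with sgdisk, the command-line sibling of gdisk, plus the standard LVM tools. A dry-run Python sketch that only prints the commands; the /dev/md0 device name, the md0pN partition naming and handing five partitions to the volume group are assumptions. sgdisk reads a G suffix as GiB, so the sketch lets the last partition take whatever space is left instead of assuming it fits exactly.

```python
import shlex

ARRAY = "/dev/md0"  # hypothetical md device name
VG = "lvm_nas"      # volume group name used in the setup
LVM_TYPE = "8e00"   # gdisk/sgdisk type code for "Linux LVM"


def partition_commands(device, count, size):
    """Fresh GPT on `device`, then `count` LVM partitions; the last takes the rest."""
    cmds = [["sgdisk", "--clear", device]]  # DESTRUCTIVE: writes a new, empty GPT
    for n in range(1, count + 1):
        end = "0" if n == count else f"+{size}"  # end 0 = end of largest free block
        cmds.append(["sgdisk", f"--new={n}:0:{end}", f"--typecode={n}:{LVM_TYPE}", device])
    return cmds


def lvm_commands(device, vg, in_use):
    """Turn the first `in_use` partitions into PVs and put them in one volume group."""
    parts = [f"{device}p{n}" for n in range(1, in_use + 1)]  # assumes mdXpN naming
    return [["pvcreate", *parts], ["vgcreate", vg, *parts]]


if __name__ == "__main__":
    # Dry run only: print the commands instead of executing them.
    for cmd in partition_commands(ARRAY, 28, "200G") + lvm_commands(ARRAY, VG, 5):
        print(shlex.join(cmd))
```

Adding space later is then a `pvcreate` on the next unused partition followed by `vgextend lvm_nas` with that partition.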

Creating an ext4 file system takes longer than creating ReiserFS. How long a file system check will take is yet to be discovered. I did, however, try growing a mounted ext4 file system, and it worked well, although it took some extra time. Next time I will probably resize file systems while they are offline.
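
Both ways of growing a file system come down to a few commands. A Python sketch that builds them, assuming ext4 on an LVM logical volume; the volume, LV name and mount point in the example are hypothetical. Online, `resize2fs` grows the mounted file system directly; offline, it expects a forced `e2fsck` first.

```python
import shlex


def grow_commands(vg, lv, extra, online=True, mountpoint=None):
    """Commands to add `extra` (e.g. "50G") to an LV and grow its ext4 file system."""
    dev = f"/dev/{vg}/{lv}"
    cmds = [["lvextend", "-L", f"+{extra}", dev]]
    if online:
        # ext4 can be grown while mounted; resize2fs fills the new LV size.
        cmds.append(["resize2fs", dev])
    else:
        if mountpoint is None:
            raise ValueError("an offline resize needs the mount point")
        # Offline: resize2fs wants a fresh forced fsck before it will resize.
        cmds += [
            ["umount", mountpoint],
            ["e2fsck", "-f", dev],
            ["resize2fs", dev],
            ["mount", dev, mountpoint],
        ]
    return cmds


if __name__ == "__main__":
    # Dry run: print the offline variant.
    for cmd in grow_commands("lvm_nas", "video", "100G", online=False, mountpoint="/mnt/video"):
        print(shlex.join(cmd))
```

lvextend also has a `--resizefs` option that performs the file system resize in the same step.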

The MythBackend and MySQL work the same as before, since it is the same Linux installation. This time MySQL has its own logical volume. Backups are mainly made using LVM snapshots: I take a snapshot of the volume and copy it out using rsync.
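
The snapshot-and-rsync backup can be sketched in Python as follows. The volume names, the 5G snapshot size and the rsync destination are hypothetical, and by default it only prints the commands. The nested try/finally makes sure the snapshot is unmounted and removed even if rsync fails.

```python
import shlex
import subprocess


def snapshot_backup_commands(vg, lv, snap_size, mountpoint, destination):
    """Commands: snapshot the LV, mount it read-only, rsync it out, clean up."""
    origin = f"/dev/{vg}/{lv}"
    snap_name = f"{lv}_snap"
    snap = f"/dev/{vg}/{snap_name}"
    return {
        "create": ["lvcreate", "--snapshot", "--size", snap_size, "--name", snap_name, origin],
        "mount": ["mount", "-o", "ro", snap, mountpoint],
        "copy": ["rsync", "-a", "--delete", f"{mountpoint}/", destination],
        "umount": ["umount", mountpoint],
        "remove": ["lvremove", "-f", snap],
    }


def run(cmd, dry_run=True):
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


def backup(vg, lv, snap_size, mountpoint, destination, dry_run=True):
    cmds = snapshot_backup_commands(vg, lv, snap_size, mountpoint, destination)
    run(cmds["create"], dry_run)
    try:
        run(cmds["mount"], dry_run)
        try:
            run(cmds["copy"], dry_run)
        finally:
            run(cmds["umount"], dry_run)
    finally:
        # Always drop the snapshot: it slows down writes to the origin and
        # becomes invalid once its copy-on-write space fills up.
        run(cmds["remove"], dry_run)


if __name__ == "__main__":
    # Hypothetical names; pass dry_run=False (as root) to actually run it.
    backup("lvm_nas", "mysql", "5G", "/mnt/snap", "backup-host:/backup/mysql/")
```

For the MySQL volume, a snapshot taken like this is only crash-consistent unless the database is flushed and locked while the snapshot is created.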
